Just now, OpenAI released three new models:

GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano.

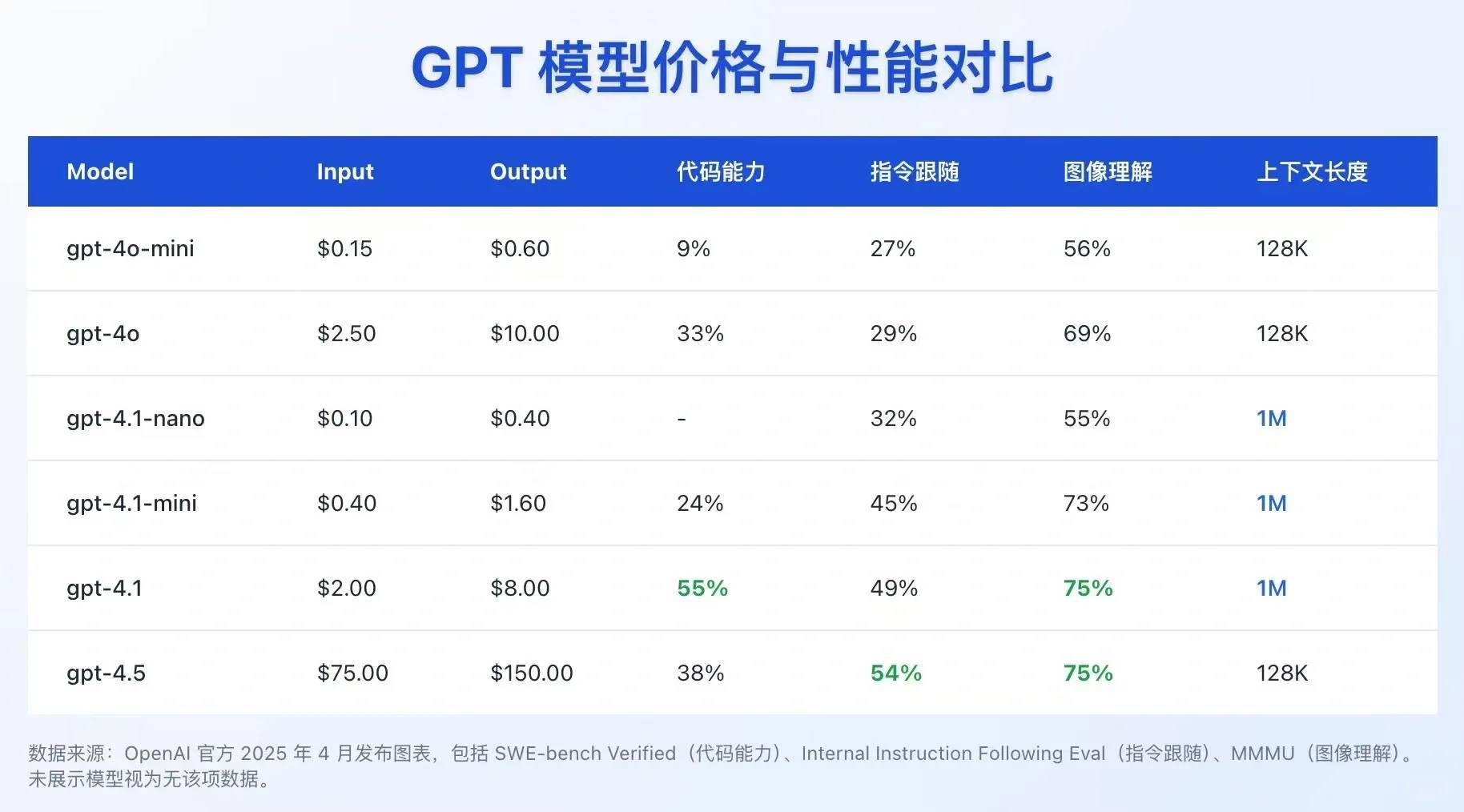

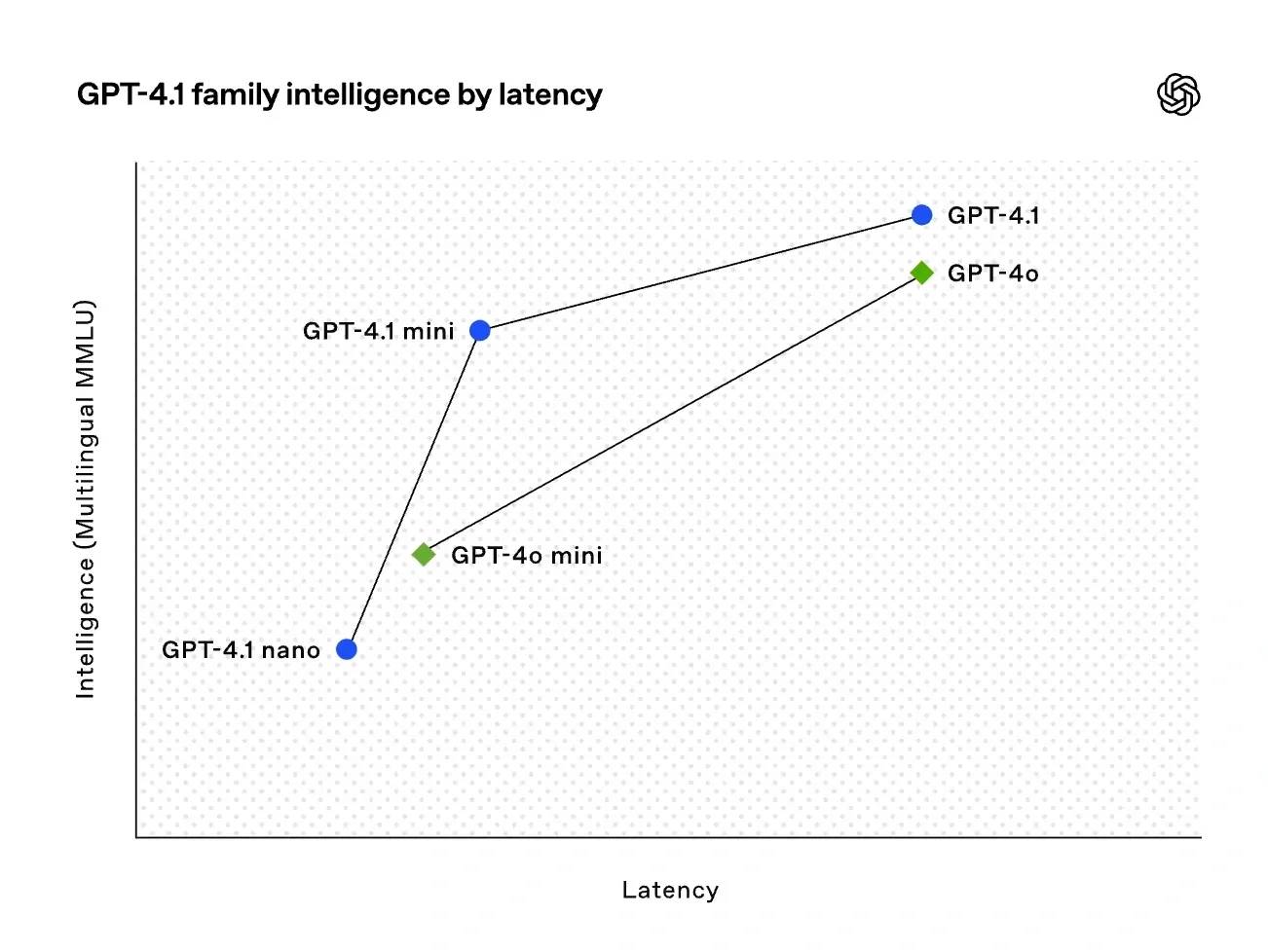

These models comprehensively outperform GPT-4o and GPT-4o mini (Figure 2️⃣).

They’re even better than GPT-4.5 (a bit absurd: 4.1 > 4.5 🤣).

(But they’re only available via API.)

The GPT-4.1 family shows significant improvements in coding and instruction-following.

All models feature a 1-million-token context length (Figure 3️⃣, from Cyber Zen Heart).

Core comparison of the GPT-4.1 family ⬇️

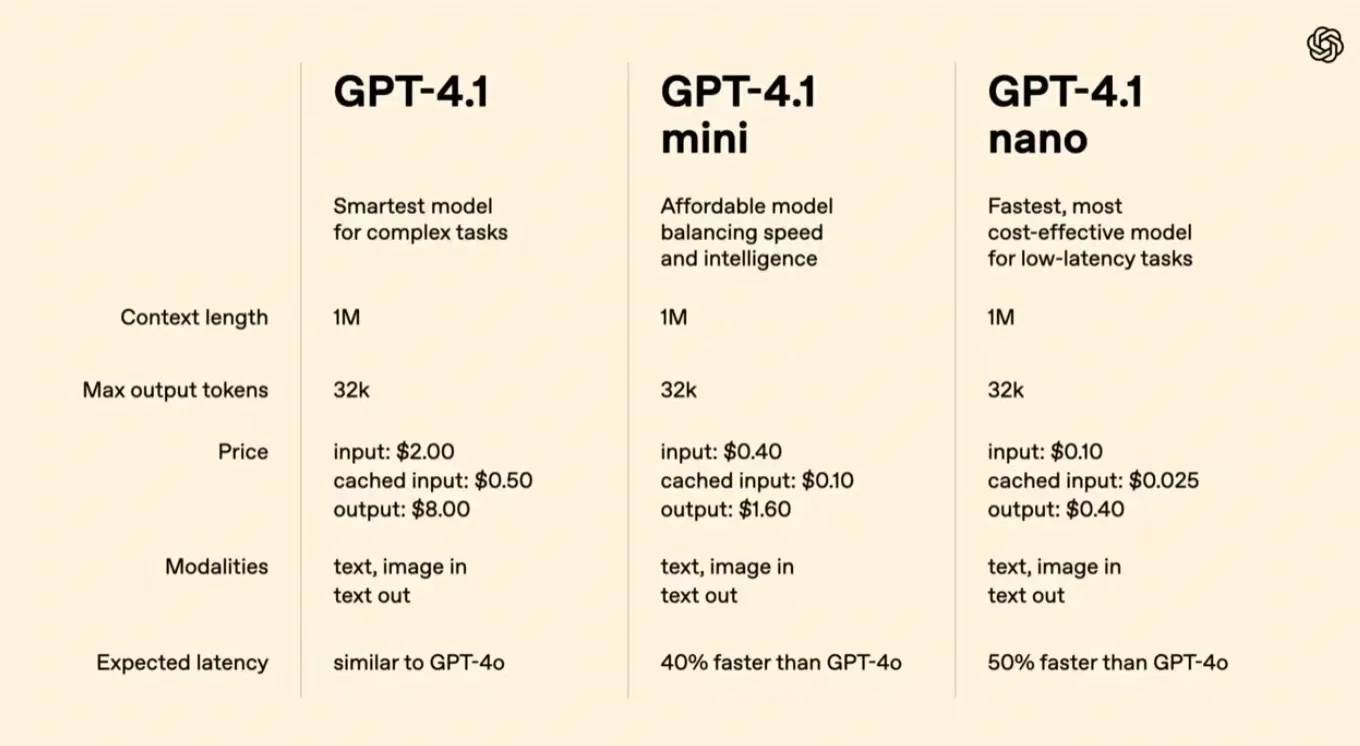

1️⃣ Model Positioning & Configurations

· GPT-4.1

Positioning: The best model for complex tasks

Max output: 32k tokens

Modality: Text, image input + text output

· GPT-4.1 mini

Positioning: Balanced speed and cost

Same configuration as GPT-4.1 but lower price

· GPT-4.1 nano

Positioning: Low latency, high cost-efficiency

Same family configuration, with 50% lower latency than GPT-4o

2️⃣ Performance

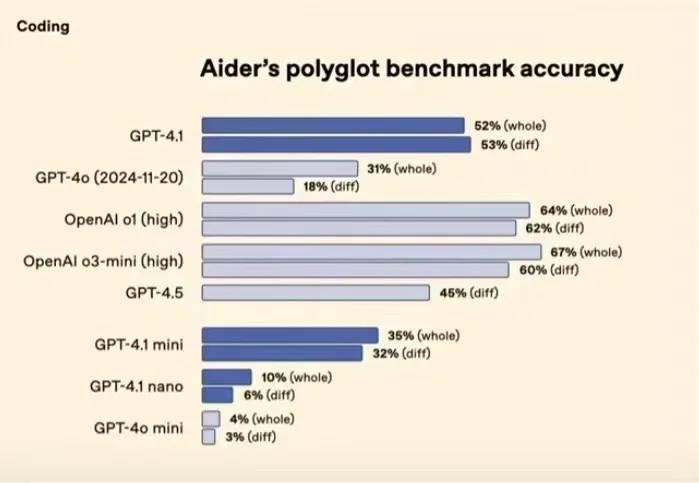

◦ Coding tasks (Aider benchmark, Figure 4️⃣)

▪ 4.1 (52%), 4.1 mini (35%), 4.1 nano (10%)

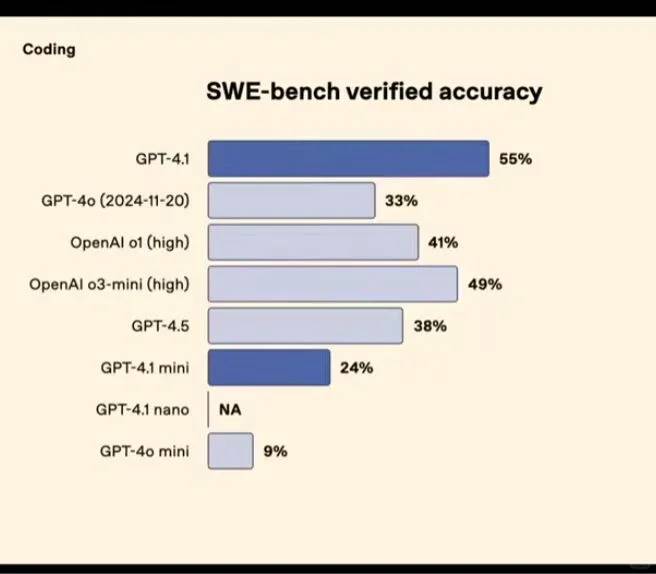

◦ SWE-bench (Figure 5️⃣)

▪ 4.1 (55%), mini (24%)

◦ Video tasks (Video-MME)

▪ 4.1 (72%) significantly outperforms 4o (65%)

3️⃣ Pricing & Cost

◦ Input cost (per million tokens)

▪ 4.1 ($2.00), mini ($0.40), nano ($0.10)

◦ Output cost (per million tokens)

▪ 4.1 ($8.00), mini ($1.60), nano ($0.40)
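To get a feel for what these prices mean per request, here is a minimal Python sketch that turns token counts into a dollar estimate. The prices are copied from the list above; the dictionary keys, the `estimate_cost` helper, and the sample token counts are illustrative assumptions, and the sketch ignores any discounts or extra fees that may apply.

```python
# Prices in USD per 1 million tokens, copied from the post above.
# The dictionary keys are labels chosen for this sketch.
PRICES_PER_MILLION = {
    "gpt-4.1": {"input": 2.00, "output": 8.00},
    "gpt-4.1-mini": {"input": 0.40, "output": 1.60},
    "gpt-4.1-nano": {"input": 0.10, "output": 0.40},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single request (hypothetical helper)."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Example: a long prompt (100k tokens) with a short answer (2k tokens).
    for model in PRICES_PER_MILLION:
        cost = estimate_cost(model, input_tokens=100_000, output_tokens=2_000)
        print(f"{model}: ${cost:.4f}")
```

With these numbers, the same 100k-token prompt costs roughly 20x less on nano than on the full GPT-4.1, which is the whole point of the tiering.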

The GPT-4.1 series offers different tiers (standard, mini, nano) to meet needs from complex tasks to low-cost real-time scenarios.

Recommended use cases ⬇️

◦ High-performance needs: GPT-4.1 or o3-mini-high (67% accuracy)

◦ Real-time, low latency: 4.1 nano (fastest and cheapest)

Nano is currently the fastest + most affordable model.
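One rough way to turn the numbers above into a choice: pick the cheapest GPT-4.1 tier whose Aider score clears the bar you need. The sketch below uses only the Aider percentages and input prices quoted in this post; the `cheapest_tier` helper and the idea of using a single benchmark as the threshold are assumptions for illustration, not an official selection rule.

```python
from typing import Optional

# Aider scores (%) and input prices (USD per 1M tokens) as quoted in this post.
TIERS = [
    {"name": "GPT-4.1 nano", "aider": 10, "input_price": 0.10},
    {"name": "GPT-4.1 mini", "aider": 35, "input_price": 0.40},
    {"name": "GPT-4.1", "aider": 52, "input_price": 2.00},
]


def cheapest_tier(min_aider_score: float) -> Optional[str]:
    """Return the cheapest tier meeting the score, or None (hypothetical helper)."""
    candidates = [t for t in TIERS if t["aider"] >= min_aider_score]
    if not candidates:
        return None  # no GPT-4.1 tier reaches the requested score
    return min(candidates, key=lambda t: t["input_price"])["name"]


if __name__ == "__main__":
    for threshold in (5, 30, 50, 60):
        print(threshold, "->", cheapest_tier(threshold))
```

A real decision would also weigh latency and output price, but the same filter-then-minimize pattern still applies.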

Follow me for more high-quality updates: I’ll keep bringing you the latest AI industry trends and insights 😎

@TechPotato

Wow, the 1-million-token context length is insane! I’m really curious to see how these new features will change app development. It seems like coding with GPT-4.1 could be super efficient. I wonder when we’ll get access through a chat interface instead of just the API.

Absolutely, the expanded context length opens up so many possibilities for developers! While I don’t have a specific timeline, I’m sure direct interfaces are on their roadmap to make it even more accessible. Exciting times ahead for app development and coding workflows! Thanks for your great question.

Wow, the 1-million-token context length is insane! I’m really curious to see how these improvements play out in real-world applications, especially with coding tasks. It’s cool that OpenAI is pushing the boundaries, though access via API feels limiting for individual users like me.