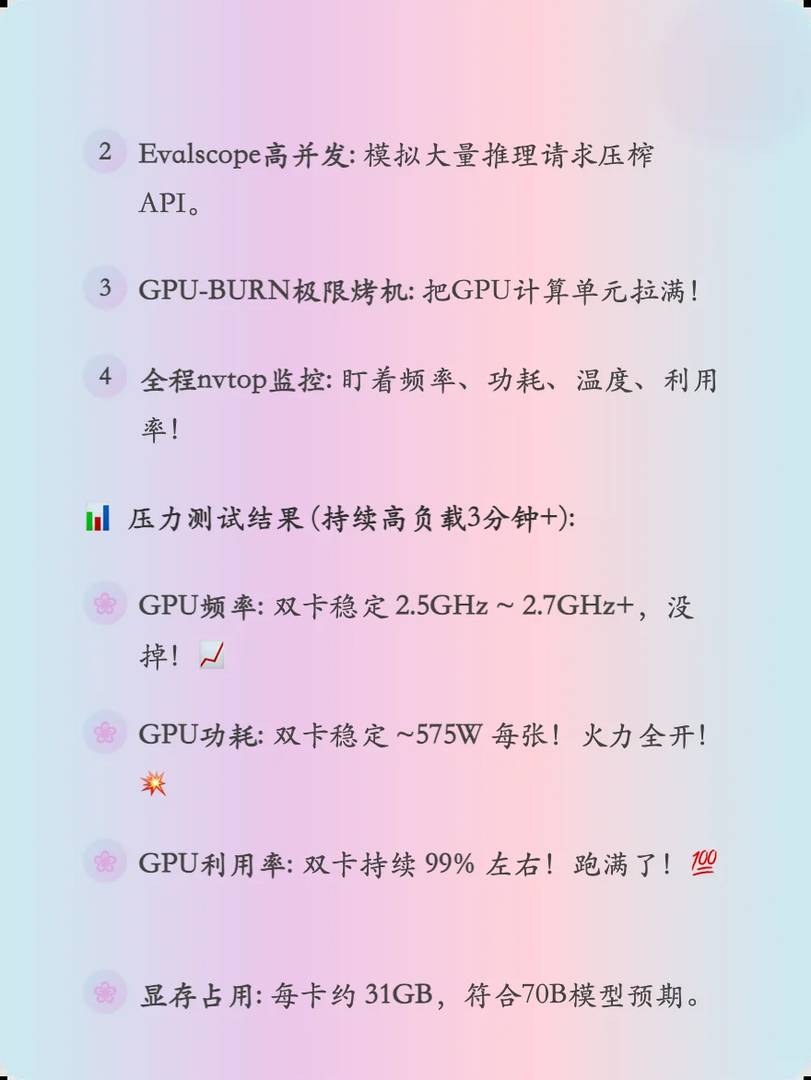

Hey AI enthusiasts and tech trailblazers! 🚀 I’ve just finished setting up my dream machine: a workstation with dual RTX 5090 GPUs! I couldn’t wait to put this beast through its paces with AI inference tests on Ubuntu. 🔥 Curious how the frameworks stack up and where the performance gains come from? Buckle up, because this post has all the juicy details!

Wow, that dual-GPU setup sounds insane! I didn’t realize the speed gains would plateau so quickly with certain frameworks. Have you tried testing any older models to see how they benefit from the extra power? Super curious about real-world application differences.

Wow, that dual-GPU setup really pushes the limits! I’m blown away by how much faster it is compared to a single GPU, especially with those optimized frameworks. Have you noticed any bottlenecks when scaling models beyond a certain size?

Absolutely. Once a model grows past a certain size, bottlenecks such as communication overhead between the GPUs or memory bandwidth limits can start to eat into the gains. It’s fascinating to explore these trade-offs and optimize around them. Thanks for the insightful question! I always enjoy hearing from readers who dig into the technical details.